Modular

A Fast, Scalable Gen AI Inference Platform

IvaraX Analysis

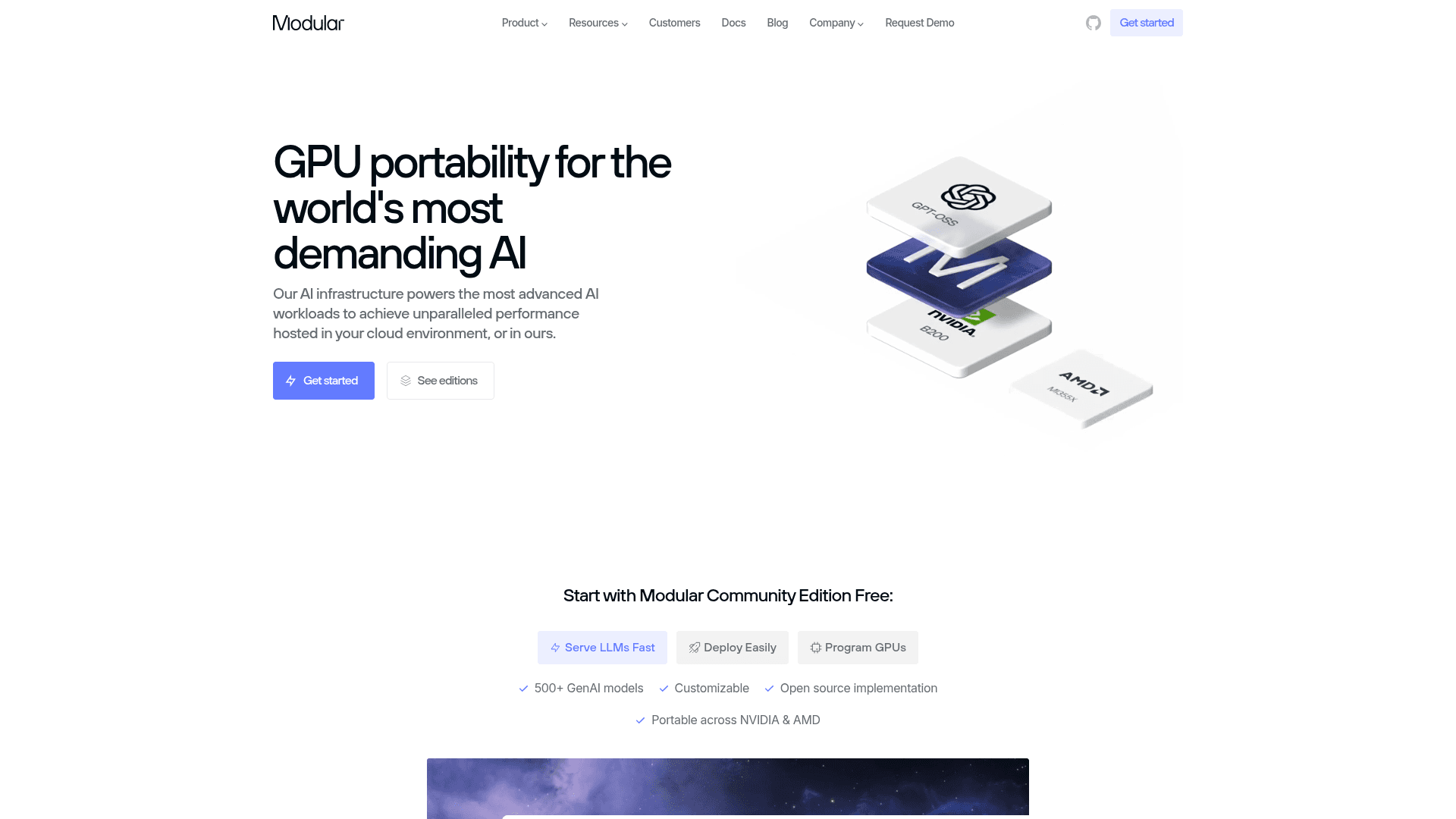

Modular offers a vertically integrated AI infrastructure platform that combines a custom programming language (Mojo), serving framework (MAX), and scaling solution (Mammoth) to deliver high-performance AI inference across multiple hardware platforms. The company has demonstrated significant cost and latency improvements through documented customer case studies, positioning itself as a compelling alternative to traditional CUDA-dependent AI stacks.

Key Strengths

- Documented performance gains, with customer testimonials citing 70-80% cost reductions and significant latency improvements

- Hardware-agnostic approach that deploys across NVIDIA and AMD GPUs without code changes

- Lightweight deployment, with containers about 90% smaller than vLLM's

- Enterprise partnerships and validation from AWS, NVIDIA, and AMD

- Open-source commitment, with full-stack transparency and community-driven development

Ideal For

- Enterprises seeking to reduce AI inference costs while maintaining or improving performance

- Organizations requiring multi-cloud or multi-GPU-vendor flexibility to avoid lock-in

- AI teams looking for production-ready serving infrastructure with minimal deployment complexity

- Companies building latency-sensitive AI applications such as real-time text-to-speech or conversational AI

Things to Consider

- The Mojo language, while Python-compatible, is a newer ecosystem that may require an investment in team learning

- Enterprise pricing and SLA details require direct engagement with the sales team

- Organizations heavily invested in CUDA-specific optimizations should evaluate the migration effort

About Modular

Modular is an AI infrastructure company founded by experienced engineers with the stated goal of democratizing high-performance AI development and deployment. Its platform comprises the MAX Framework for GenAI serving, the Mojo programming language for GPU and CPU performance, and Mammoth for scaling across clusters. The technology is designed to deliver high performance while keeping workloads portable across NVIDIA and AMD GPUs.

The platform targets recurring AI infrastructure problems: vendor lock-in, complex deployment pipelines, and performance optimization. Modular's vertically integrated approach, spanning compiler technology through orchestration, lets developers write code once and deploy it across supported hardware, with optimization for each target handled by the stack.

Customers report up to an 80% reduction in inference costs and 70% improvements in latency compared with traditional solutions. Modular's customers range from fast-growing AI startups to major cloud providers such as AWS, and it partners with NVIDIA and AMD. The company has open-sourced its entire stack, reflecting a mission to make fast AI accessible to developers everywhere.
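Percentage reductions are easy to misread as multipliers. The sketch below converts the customer-reported figures (up to 80% lower cost, 70% latency improvement) into equivalent multipliers. It assumes "70% improvement in latency" means latency falls to 30% of the baseline, which the source does not state explicitly; the baseline values ($10 per million tokens, 500 ms) and the function names are hypothetical, chosen only for illustration.

```python
def reduction_to_multiplier(reduction_pct: float) -> float:
    """Convert a percentage reduction (e.g. 80 for "80% lower") into the
    equivalent multiplier on the inverse quantity (e.g. tokens per dollar)."""
    if not 0 <= reduction_pct < 100:
        raise ValueError("reduction must be in [0, 100)")
    remaining = 1 - reduction_pct / 100  # fraction of the baseline still paid or waited
    return 1 / remaining


def reduced_value(baseline: float, reduction_pct: float) -> float:
    """Apply a percentage reduction to a baseline value."""
    return baseline * (1 - reduction_pct / 100)


if __name__ == "__main__":
    # Hypothetical baselines, not figures from any case study.
    baseline_cost, baseline_latency_ms = 10.00, 500.0
    print(f"cost: ${reduced_value(baseline_cost, 80):.2f} per million tokens "
          f"({reduction_to_multiplier(80):.1f}x tokens per dollar)")
    # Assumes the 70% latency "improvement" is a 70% reduction.
    print(f"latency: {reduced_value(baseline_latency_ms, 70):.0f} ms "
          f"({reduction_to_multiplier(70):.2f}x faster)")
```

Under these assumptions, an 80% cost reduction corresponds to 5x as many tokens per dollar and a 70% latency reduction to roughly a 3.3x speedup; the multipliers do not depend on the chosen baselines.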

Why Choose Modular

- Customer-reported 60-80% cost reductions in AI inference workloads, documented in case studies from production deployments

- True hardware portability across NVIDIA and AMD GPUs without rewriting code, eliminating vendor lock-in

- 90% smaller containers than competitors like vLLM, enabling sub-second cold starts and reduced infrastructure costs

- Vertically integrated stack from Mojo language to MAX serving to Mammoth orchestration for end-to-end optimization

- Support for 500+ open GenAI models with OpenAI-compatible API for seamless integration
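Because the serving API follows the OpenAI chat-completions format, an existing client can usually be pointed at a Modular endpoint by changing the base URL. The sketch below uses only the Python standard library. The base URL (`http://localhost:8000/v1`), the model identifier, and the helper names are assumptions for illustration, not values taken from Modular's documentation.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible server (e.g. a MAX deployment) listens here.
BASE_URL = "http://localhost:8000/v1"
# Hypothetical placeholder; use the model the server was actually started with.
MODEL = "example-org/example-model"


def build_chat_request(prompt: str, model: str = MODEL, max_tokens: int = 128) -> dict:
    """Build a request body in the OpenAI chat-completions format."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def parse_reply(payload: dict) -> str:
    """Return the generated text from an OpenAI-format chat-completions response."""
    return payload["choices"][0]["message"]["content"]


def chat(prompt: str, base_url: str = BASE_URL, timeout: float = 60.0) -> str:
    """POST a prompt to {base_url}/chat/completions and return the reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_reply(json.load(resp))


# Usage (requires a running server):
#   print(chat("Explain what an inference server does in one sentence."))
```

Keeping the request builder and response parser separate from the network call makes both testable without a server, and makes it straightforward to swap in a dedicated OpenAI client library later.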

Services

GenAI Inference Platform, AI Infrastructure, GPU Programming, AI Model Serving, Multi-cloud Deployment

Technologies

Mojo, MAX Framework, Mammoth, NVIDIA GPU, AMD GPU, CUDA, ROCm, Kubernetes, Docker, MLIR

Tech Stack (detected from website)

Mojo Programming Language, MLIR (Multi-Level Intermediate Representation), Docker/Kubernetes, NVIDIA CUDA, AMD ROCm, OpenAI-compatible API, Hugging Face Integration, Tensor Core Operations

Notable Clients

Inworld, San Francisco Compute, Qwerky AI, AWS, NVIDIA, AMD

Company Info

- Headquarters: US